Imagine an AI that lets you describe anything you want drawn in plain English and then generates an image of it with the skill of a genuinely talented artist. How far away do you think we are from such technology? Impossible? 100 years away? 10 years away? If you answered "that already exists today", you are correct! I'm talking about DALL·E 2. It's currently being rolled out to only a limited number of people, but it will be made public someday. For now, you can sign up for their waitlist if you want.

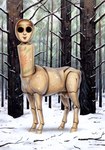

Let's put it to the test right here and now: for each of these images, cover up the bottom-right corner to hide any watermark and try to determine whether a human or an AI made it. You can then look for the watermark, 5 colored squares, to see which ones were generated with DALL·E 2.

https://imgur.com/a/oAdgnQB

https://imgur.com/a/s2Xxfn4

https://imgur.com/a/AQOhNfP

https://imgur.com/a/QK12zO3

https://imgur.com/a/v2Ok6GJ

https://imgur.com/a/j6WcoPL

https://imgur.com/a/5Kvuq2s

https://imgur.com/a/qpfjLVS

Luckily for furry artists, DALL·E 2 does have some obvious limitations:

- Its terms and conditions forbid using it to create pornographic or violent images

- From what I can tell, it's often bad at generating eyes

- You can't give it a ref sheet of your original character

- It usually can't produce readable text; the words come out as gibberish (the "No T-rexans" one kinda making sense was just a fluke)

- It generates images, not animations or comics

There's also a competitor, Midjourney, which I know a lot less about, but it might be just as good.

How do you guys think this new technology will affect furries and the rest of the world? Did all artists just get completely screwed over? Is it a tool that could help artists? Is it going to be used professionally in the future, or just for memes and such?

https://www.reddit.com/r/dalle2/

EDIT:

While the public beta is free (if you're lucky enough to get your hands on it... the waitlist is long), it will almost certainly be a paid service once released publicly. Pricing per usage is yet to be determined.

- Pricing for those who have access to the beta starts at $15 for 115 credits. You also get 50 free credits to start out with, plus 15 free credits each month. 1 usage = 1 credit.

- The public release is probably coming "soon".

Updated by NotMeNotYou